Affiliations

News

- 05/2025: 🎉 Two papers accepted to ACL 2025! 🎉
- 05/2025: Received the Best Talk Award at the 2025 TTIC Student Workshop. 📷 Photos
- 09/2024: The "Knowledge in Generative Models" workshop, which I co-organized at ECCV 2024, was a huge success! 📷 Photos
- 09/2024: Attended the 2024 Midwest Computer Vision Workshop at Indiana University Bloomington.
- 08/2024: Spent an amazing summer at Toyota Research Institute. 📷 Photos
- 06/2024: Presented our work Intrinsic-LoRA at CVPR 2024 in Seattle. 📷 Photos
- 04/2024: Attended the Multi-University Workshop organized by Toyota Research Institute in Los Altos; co-organized the student panel and served as a panelist. 📷 Photos
- 03/2024: Will intern at Toyota Research Institute in Los Altos this summer, working with Dr. Vitor Guizilini.
- 10/2023
- 09/2023: Received the Outstanding TA Award. 📷 Photos
- 02/2023: Started an internship at Adobe Research. 📷 Photos

Research

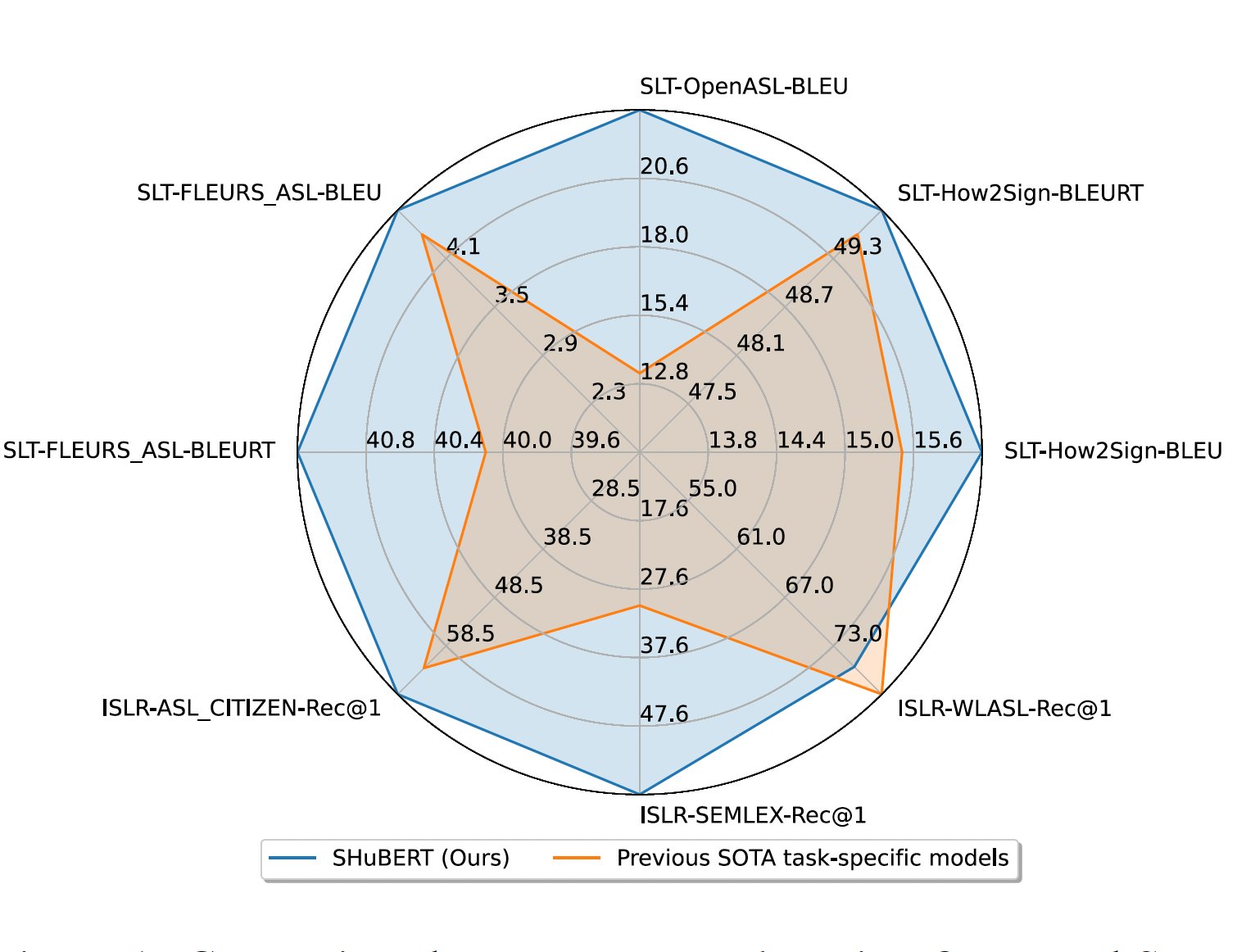

SHuBERT: Self-Supervised Sign Language Representation Learning via Multi-Stream Cluster Prediction

Shester Gueuwou, Xiaodan Du, Greg Shakhnarovich, Karen Livescu, Alexander H. Liu

Project Page | arXiv | Code

The 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025 Oral)

We introduce SHuBERT, a self-supervised representation learning approach that adapts masked prediction to multi-stream visual sign language input, learning to predict multiple targets corresponding to clustered hand, face, and body pose streams. SHuBERT achieves state-of-the-art performance on multiple sign language benchmarks.

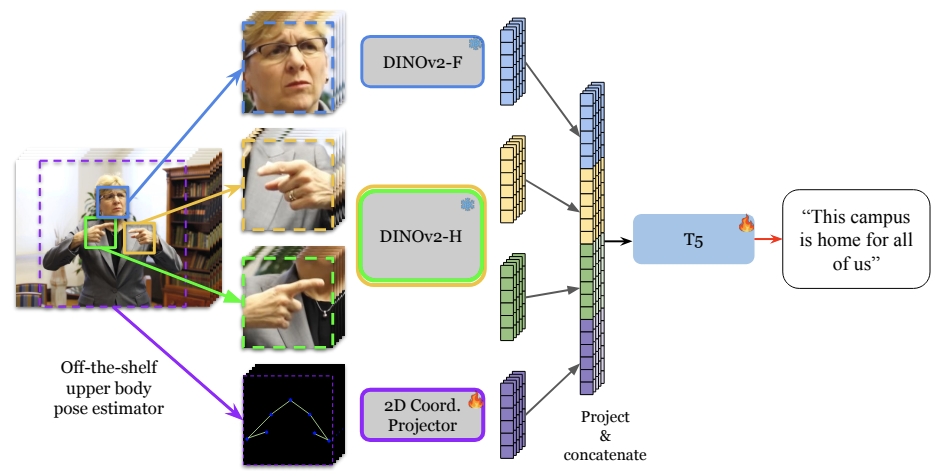

SignMusketeers: An Efficient Multi-Stream Approach for Sign Language Translation at Scale

Shester Gueuwou, Xiaodan Du, Greg Shakhnarovich, Karen Livescu

Project Page | arXiv

The 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025)

We introduce SignMusketeers for sign language translation at scale. Compared to the recent state of the art in ASL-to-English translation, it uses only 3% of the compute, 41x less pretraining data, and 160x fewer pretraining epochs, while achieving competitive performance (-0.4 BLEU).

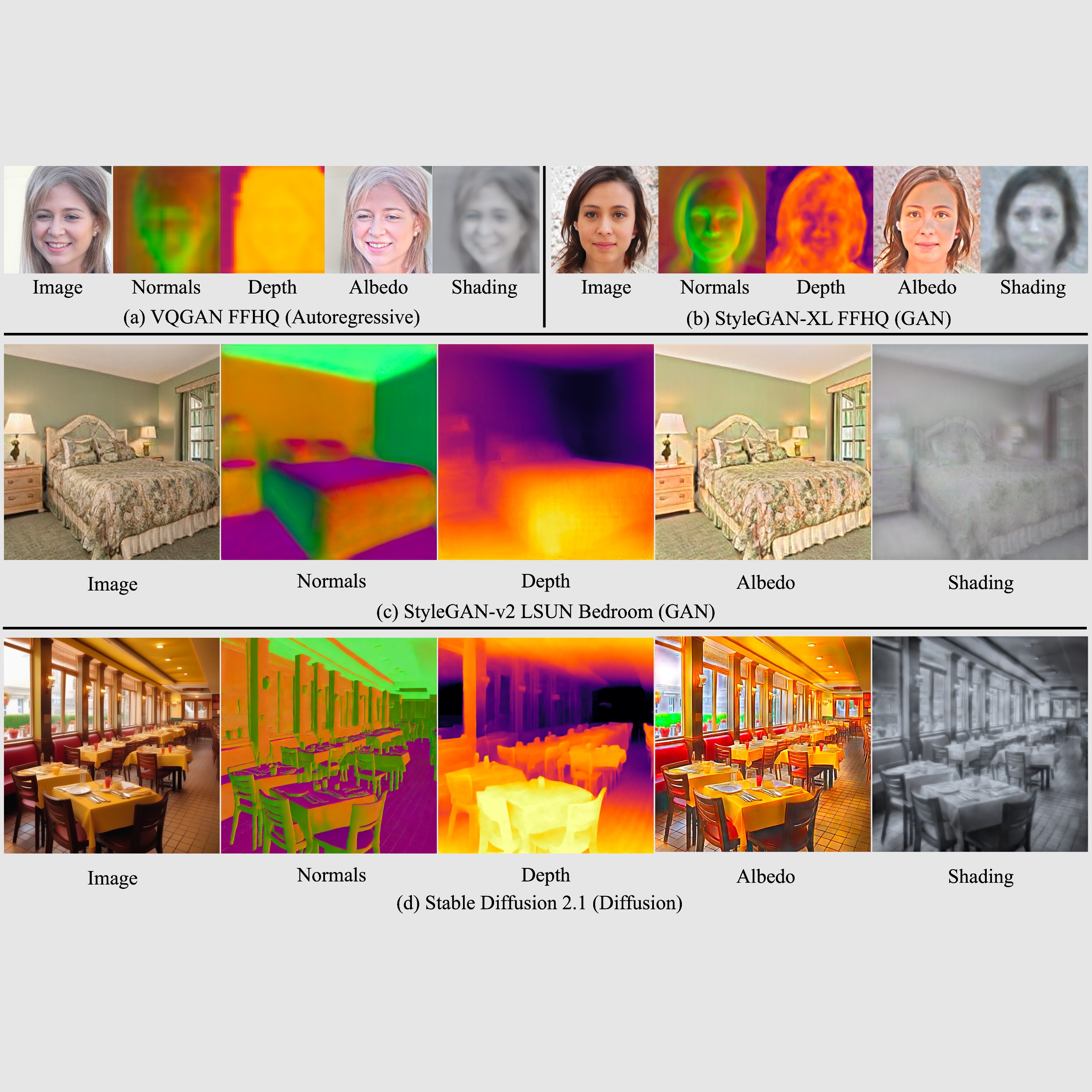

Generative Models: What do they know? Do they know things? Let's find out!

Previous title: Intrinsic LoRA: A Generalist Approach for Discovering Knowledge in Generative Models

Xiaodan Du, Nicholas Kolkin, Greg Shakhnarovich, Anand Bhattad

Project Page | arXiv | Code

IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) workshops, 2024

We introduce Intrinsic LoRA (I-LoRA), a universal, plug-and-play approach that transforms any generative model into a scene intrinsic predictor, capable of extracting intrinsic scene maps directly from the original generator network.

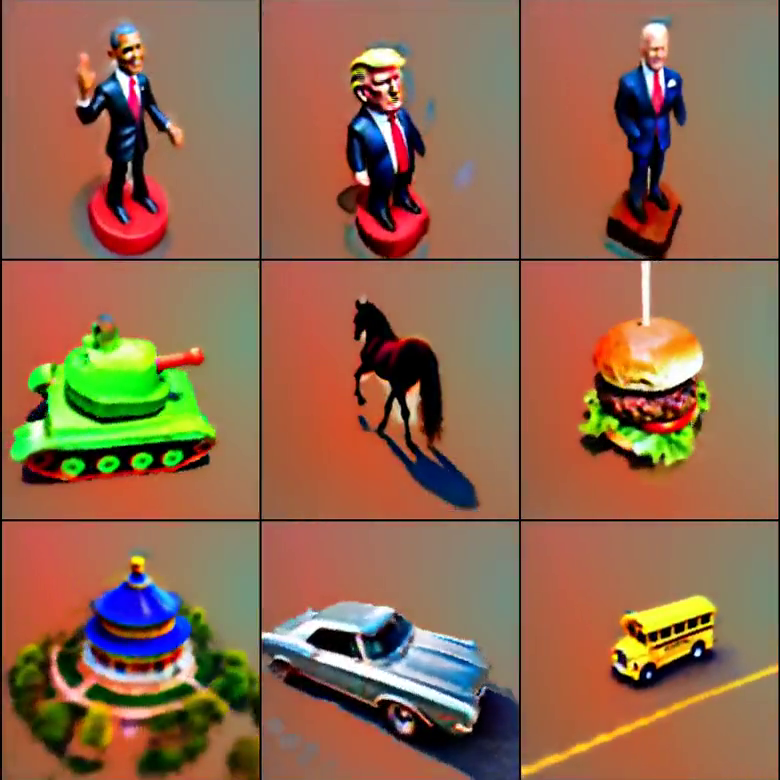

Score Jacobian Chaining: Lifting Pretrained 2D Diffusion Models for 3D Generation

Haochen Wang*, Xiaodan Du*, Jiahao Li*, Raymond A. Yeh, Greg Shakhnarovich

Project Page | arXiv | Code

IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR), 2023

Generating 3D objects from 2D diffusion models by chaining scores with NeRF gradients.

Text-Free Learning of a Natural Language Interface for Pretrained Face Generators

Xiaodan Du, Raymond A. Yeh, Nicholas Kolkin, Eli Shechtman, Greg Shakhnarovich

We propose Fast text2StyleGAN, a natural language interface that adapts pre-trained GANs for text-guided human face synthesis.

Internship Experience

02/2023 - 05/2023

Research Intern

Adobe Research

Supervised by Dr. Nick Kolkin and Dr. Eli Shechtman.

Services

Conference/Workshop Reviewer: CVPR 2026 (1) | ICLR 2026 (2) | SIGGRAPH 2025 (1) | CVPR 2025 (4) | ICLR 2025 (1) | WACV 2025 (8) | ECCV 2024 (3/4) | CVPR 2024 (/3) | SIGGRAPH 2024 (1)

Workshop Organizer:

09/2024

ECCV 2024 Workshop on Knowledge in Generative Models: co-organizer

04/2024

Toyota Research Institute Multi-University Workshop: student panel co-organizer, panelist